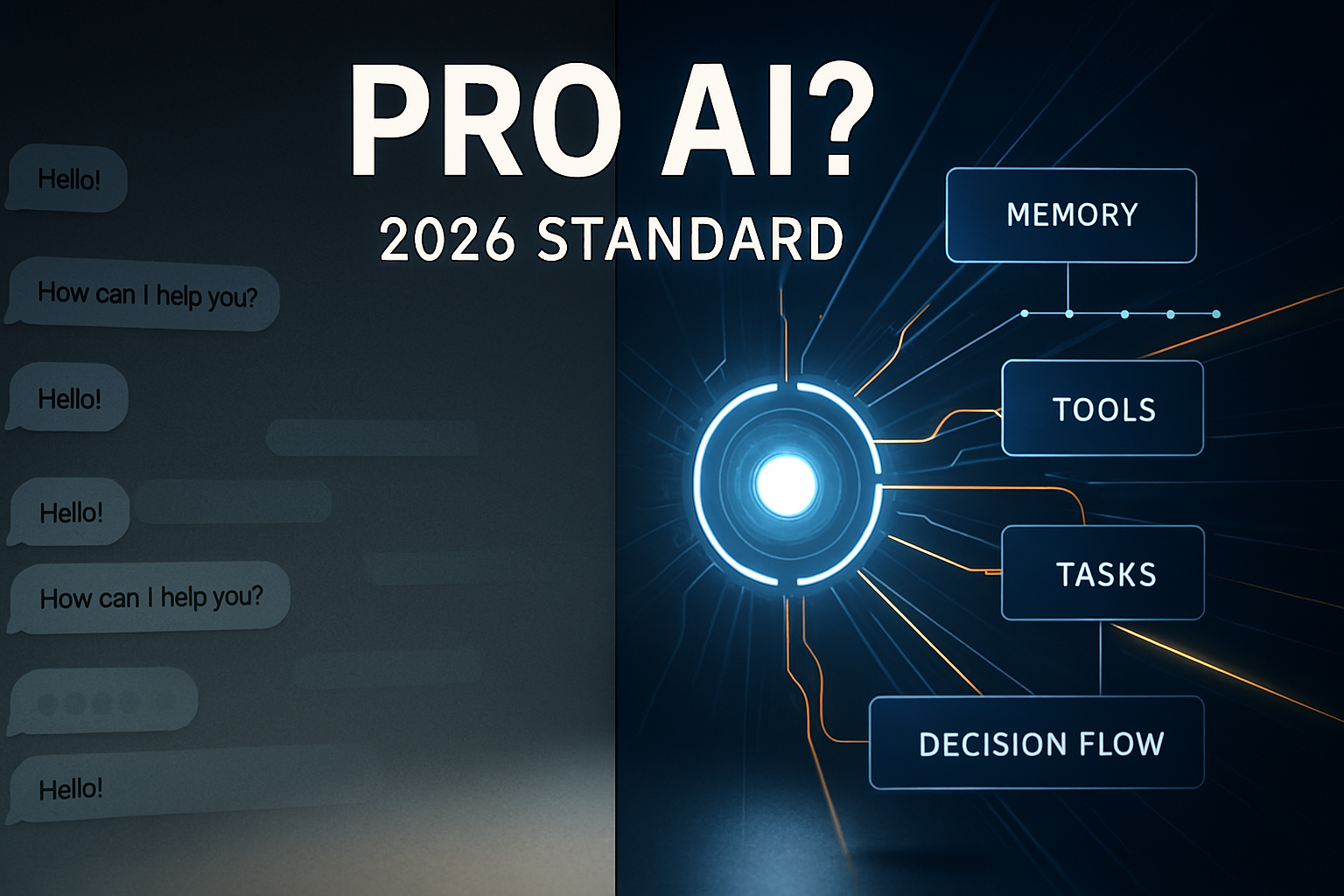

We’ve all been there: colliding with the frustratingly rigid guardrails of a scripted chatbot that can only answer a narrow set of questions. While helpful for simple queries, these tools fall short of being true assistants. But a new “pro” standard for AI is emerging, one that will define the professional assistant of 2026. This isn’t just an upgrade; it’s a fundamental reinvention. Here are the three shifts that separate these advanced “agentic AIs” from the chatbots we know today.

---

1. They Don’t Just Talk, They Act.

The most significant evolution is the paradigm shift from conversation to action. Outdated chatbots are confined to predefined scripts, but the next generation functions as “Autonomous AI Agents.” This concept, also known as “agentic AI,” centers on the ability to set goals, make decisions, and execute multi-step tasks across different applications without constant human intervention.

The key difference is actionability. Instead of just providing information, these assistants get things done. This empowers the AI to execute high-value tasks like updating a customer record in your CRM, sending a follow-up email after a call, scheduling a meeting across multiple calendars, or generating a sales report by pulling data from disparate sources. To accomplish this, they rely on deep integration with a company’s existing tech stack. By connecting seamlessly with platforms like Salesforce, Slack, Microsoft 365, and Google Workspace, the AI can orchestrate complex workflows between them.

The strategic implication here is that the AI transforms from a tool you consult into a teammate you delegate to. It’s no longer a passive information source but an autonomous contributor, actively monitoring digital environments and initiating workflows when triggered by real-world events. This is the transition to genuine automation.
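To make the delegation idea concrete, here is a minimal sketch in Python of an event that triggers a multi-step workflow across two tools. Everything in it is an assumption for illustration: `update_crm_record`, `send_follow_up_email`, `run_plan`, and `on_call_ended` are hypothetical names, and the "CRM" and "outbox" are in-memory stand-ins rather than real Salesforce or email integrations. In a real agent, a model would likely produce the plan; here it is hard-coded to keep the example runnable.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical in-memory stand-ins for real integrations (CRM, email).
CRM: Dict[str, Dict[str, str]] = {"cust-001": {"status": "lead"}}
OUTBOX: List[Dict[str, str]] = []


def update_crm_record(customer_id: str, status: str) -> str:
    """Pretend to update a customer record in a CRM."""
    CRM[customer_id]["status"] = status
    return f"CRM updated: {customer_id} -> {status}"


def send_follow_up_email(to: str, subject: str) -> str:
    """Pretend to send an email by appending it to an outbox list."""
    OUTBOX.append({"to": to, "subject": subject})
    return f"Email queued for {to}"


# Registry mapping tool names to the callables the agent may invoke.
TOOLS: Dict[str, Callable[..., str]] = {
    "update_crm_record": update_crm_record,
    "send_follow_up_email": send_follow_up_email,
}


@dataclass
class Step:
    tool: str
    args: Dict[str, str] = field(default_factory=dict)


def run_plan(plan: List[Step]) -> List[str]:
    """Execute each step in order, skipping tools that are not registered."""
    results = []
    for step in plan:
        if step.tool not in TOOLS:
            results.append(f"Skipped unknown tool: {step.tool}")
            continue
        results.append(TOOLS[step.tool](**step.args))
    return results


def on_call_ended(customer_id: str, email: str) -> List[str]:
    """Event handler: a finished sales call triggers a two-step workflow."""
    plan = [
        Step("update_crm_record", {"customer_id": customer_id, "status": "contacted"}),
        Step("send_follow_up_email", {"to": email, "subject": "Thanks for your time"}),
    ]
    return run_plan(plan)
```

Calling `on_call_ended("cust-001", "ana@example.com")` updates the stand-in CRM record and queues one email. The registry check in `run_plan` is one simple way to keep an agent limited to the actions it has been explicitly given.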

---

2. They Don’t Just Read, They Perceive.

Yesterday’s AI assistants were limited to text. The 2026 pro standard is multimodal, meaning it can process, understand, and use various data formats—including text, images, audio, and even video—within a single interaction. This creates a far more natural and powerful user experience by removing the cognitive load of having to “speak the computer’s language.”

This new perceptual ability manifests in several ways. Users can engage in sophisticated, multi-turn voice conversations, allowing for hands-free operation while driving or multitasking. Furthermore, the assistant can “see” and interpret visual data. You could upload a screenshot for troubleshooting instructions or submit a photo of an invoice to have the data automatically extracted and entered into a system.

This seamless flow removes communication barriers, allowing users to interact with data as naturally as they would with a human colleague. The AI now meets us where we are, dissolving the boundary between user and tool for a truly intuitive interface.

---

3. They Don’t Forget, They Remember.

One of the biggest frustrations with chatbots is their amnesia; they forget everything the moment a conversation ends. In contrast, a pro assistant possesses persistent, long-term memory, allowing it to build context and understanding over time. This memory isn’t just for a single session—it can extend over months or even years, enabling the AI to recall past conversations and user preferences.

This leads to far more personalized and relevant interactions. Furthermore, these assistants provide grounded responses. To mitigate the risk of “hallucinations,” they base their answers on trusted, verified sources, such as internal knowledge bases or real-time web searches, and provide citations for verification. Grounding does not guarantee correctness, but it makes the information they deliver more likely to be accurate and current, and easier to check.
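As a rough illustration of grounding, the sketch below answers only from a small set of trusted documents and attaches a citation to whatever it returns. It is a toy under stated assumptions: `KNOWLEDGE_BASE`, `retrieve`, and `grounded_answer` are hypothetical names, the documents are made up, and simple word overlap stands in for the search and language model a real assistant would use.

```python
import re
from typing import Dict, List, Set, Tuple

# Hypothetical internal knowledge base: document id -> text.
KNOWLEDGE_BASE: Dict[str, str] = {
    "hr-policy-12": "Employees accrue 1.5 vacation days per month.",
    "it-guide-03": "Reset your VPN password from the self-service portal.",
}


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, min_overlap: int = 2) -> List[Tuple[str, str]]:
    """Return (doc_id, text) pairs that share at least min_overlap words
    with the question, best match first."""
    question_words = tokenize(question)
    scored = []
    for doc_id, text in KNOWLEDGE_BASE.items():
        overlap = len(question_words & tokenize(text))
        if overlap >= min_overlap:
            scored.append((overlap, doc_id, text))
    scored.sort(reverse=True)
    return [(doc_id, text) for _, doc_id, text in scored]


def grounded_answer(question: str) -> str:
    """Answer only from a retrieved source and cite it; otherwise decline."""
    sources = retrieve(question)
    if not sources:
        return "I could not find a trusted source for that."
    doc_id, text = sources[0]
    return f"{text} [source: {doc_id}]"
```

The important design choice is the refusal branch: when nothing in the trusted set matches, the function says so instead of guessing.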

The combination of long-term memory and verifiable accuracy builds trust. The AI evolves from a generic utility into a reliable, personalized partner that continuously learns from interactions to not only tailor its responses but also proactively anticipate user needs.
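The persistent memory described in this section can be sketched with nothing more than a small database that outlives the conversation. The example below uses Python's built-in `sqlite3` module; the `MemoryStore` class, its `remember` and `recall` methods, and the table layout are hypothetical and chosen only for illustration, not taken from any particular assistant product.

```python
import sqlite3
from typing import Optional


class MemoryStore:
    """Hypothetical long-term memory: key/value facts per user, kept in SQLite."""

    def __init__(self, path: str) -> None:
        # A file path (rather than an in-memory database) is what lets
        # the stored facts survive after the session ends.
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS memory ("
            "user_id TEXT, key TEXT, value TEXT, "
            "PRIMARY KEY (user_id, key))"
        )
        self.conn.commit()

    def remember(self, user_id: str, key: str, value: str) -> None:
        # INSERT OR REPLACE overwrites an older value for the same user and key.
        self.conn.execute(
            "INSERT OR REPLACE INTO memory VALUES (?, ?, ?)",
            (user_id, key, value),
        )
        self.conn.commit()

    def recall(self, user_id: str, key: str) -> Optional[str]:
        # Returns None when nothing has been stored for this user and key.
        row = self.conn.execute(
            "SELECT value FROM memory WHERE user_id = ? AND key = ?",
            (user_id, key),
        ).fetchone()
        return row[0] if row else None

    def close(self) -> None:
        self.conn.close()
```

A first session might call `remember("u1", "report_format", "PDF")` and close the store; a later session that opens the same file can `recall` that preference without the user repeating it.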

---

Conclusion: The Dawn of the Do-Everything Assistant

These three pillars—autonomy, perception, and memory—are not mere upgrades; they represent a complete re-architecting of what an AI assistant is and does. The transformation is from a simple conversationalist to an autonomous agent, from a text-only tool to a multimodal perceiver, and from a forgetful utility to a context-aware partner you can trust.

As AI assistants begin to not only understand but also act on our behalf, how will they redefine our daily work and productivity?